Information Horizons

After the NSA, Facebook and Cambridge Analytica practices hit home, it’s understandable if you feel there’s nothing you can do to protect yourself. There is. I was a few years ahead in predicting these problems, and have since figured out some ways to protect myself.

Before we get into the practical, I’ll cover some principles.

If we let them make us think we’re powerless, we become powerless

The first is recognising that if we allow ourselves to feel powerless, we become powerless.

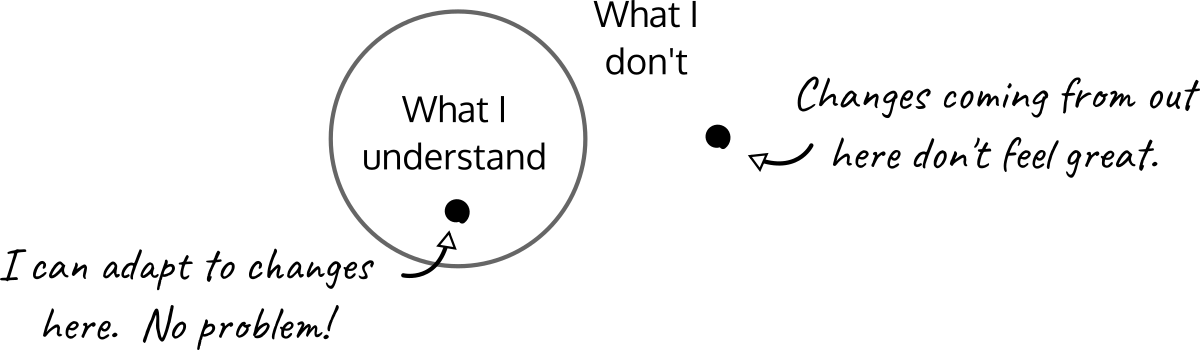

When we understand what’s going on around us, we feel in control. When some outside force that we don’t understand acts on us, we don’t feel in control.

“The greatest tool of the oppressor is the mind of the oppressed.” - Steve Biko, South African freedom fighter

Understanding what impacts us allows us to adapt. Even if we don’t need to adapt, not understanding it makes it feel more threatening.

So what happens when people somewhere else in our country, somewhere else in our world, affect us? For example, through military or economic means? If we understand what’s going on, we can adapt. If we don’t understand, we feel powerless.

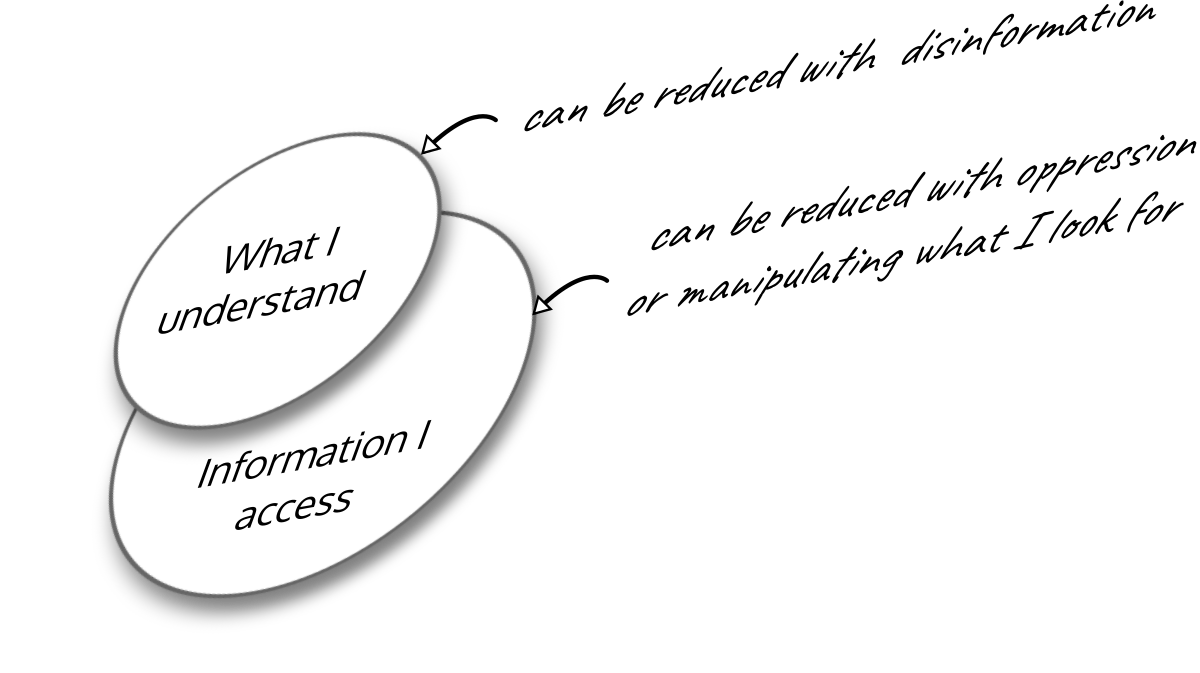

What we’re able to understand depends on what information we choose to access

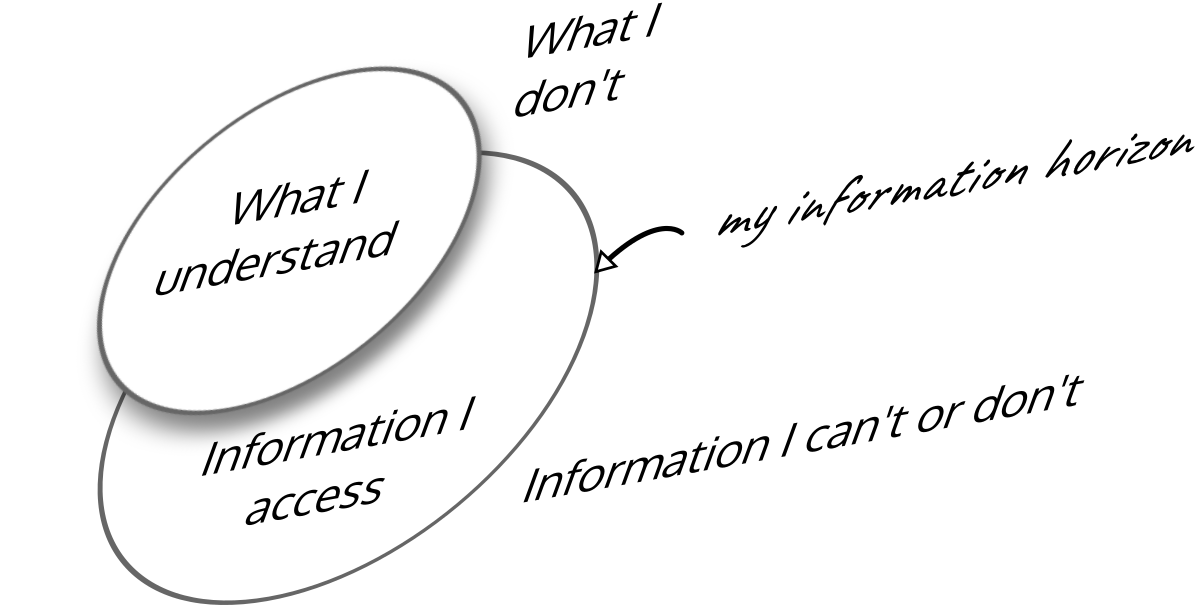

What we understand depends on our access to information.

I think of this in terms of Information Horizons.

The distance to which we can see and understand, our Information Horizon, gives us that familiarity. Whereas horizons are how far we can physically see, our Information Horizons are about what we can observe well enough to understand.

There are other people out there, in their own Information Horizons – and we affect each other.

But when something comes over the horizon that we didn’t expect and don’t understand – that’s often a problem.

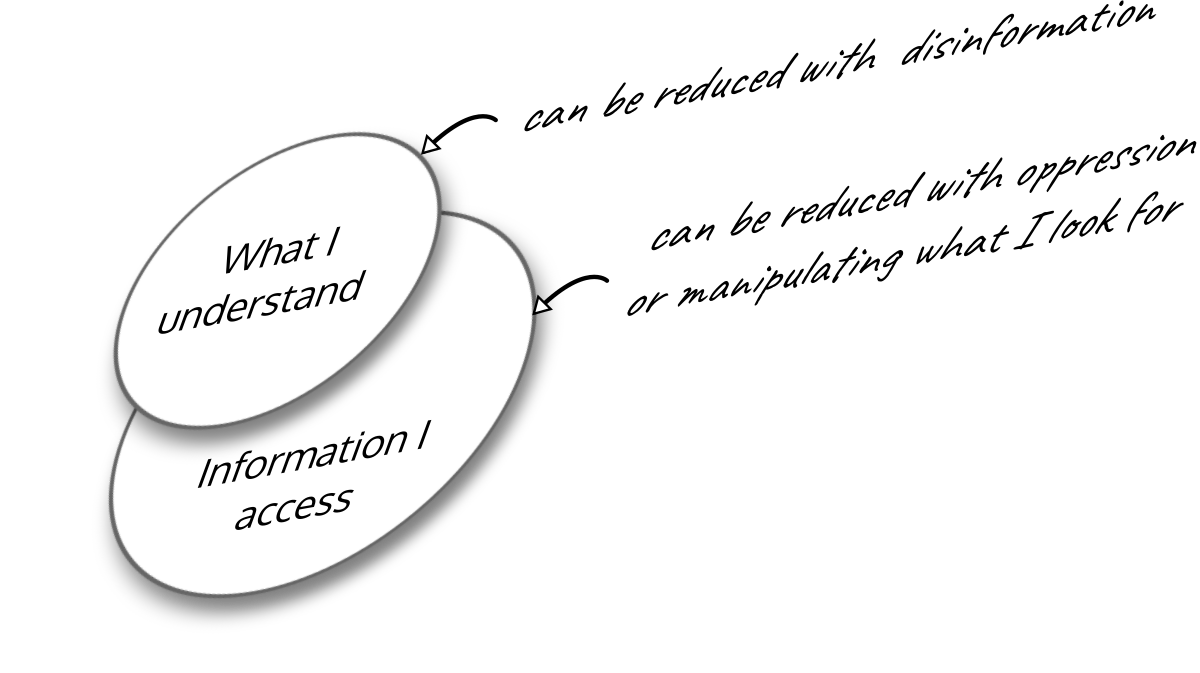

Propaganda and misinformation aren’t new. But these days, it’s far more possible to control opinions by restricting information through social media bubbles. Social media bubbles reduce what you can see because they isolate your attention to a small group of people, and use algorithms to filter out information based on commercial goals, not yours. This reduces your Information Horizon.

How much of what you feel could be improved if you could understand the people that affect you? How much of what you know is limited because you choose to pay attention to a small slice of information from a small group of people? Does this make sense in such a globally-connected world?

Now that a lot of us are experiencing this lack of control and visibility, we’re getting distraught. But we don’t have to be.

How we get controlled

Getting practical, if you want to change things, there’s a useful idea from the computer security world called Threat Modeling. The EFF has a nice guide if you want to get into it, but the basic idea is that if you think about what an adversary wants from you, you can take steps to stop them.

A few years ago, most people worried about privacy were considered a little loopy. After all, what did we have to lose by giving up our private data to marketers or our governments? Even in the Infosec world, it was considered inappropriate to look at personal information as part of a threat model. It was more about protecting the hard data on your devices. But these days, when we look at what’s going on with Facebook, Trump, Brexit and Cambridge Analytica, it’s clear we have some important data to protect that’s not on our devices. We need to protect that data to protect our democratic rights and even our opinions.

We’re stuck in social media bubbles. Our Information Horizon is shrinking. Therefore, so is our understanding of what affects us, and along with it, our sense of control.

This is addressable.

We have control over what affects our own capacity for self-determination. Not just on an emotional level, but on a more practical, informational level.

So, let me take you through a part of my Threat Model, with a view to protecting my ability to understand the world and what affects me.

My fundamental realisation is that even though I’m a nobody and no government would want to “spy” on me, there are political campaigns out there that screw with what people see and believe, and I am on their list of millions of targets.

So from a threat modeling perspective, I need to expect that adversaries are trying to reduce my Information Horizon and then counter-act them.

(Another part, a more classical threat model for data on my devices, is something I might share another time.)

Types of personal information

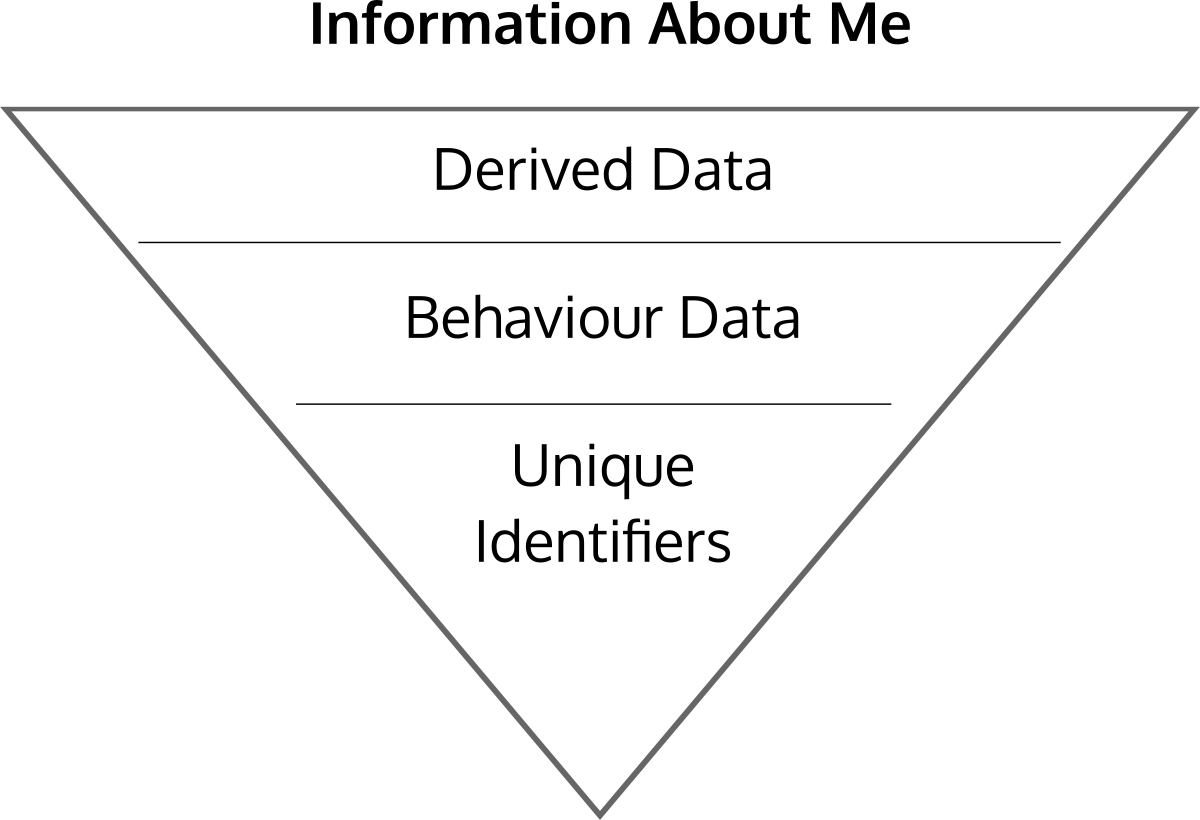

There are two types of information that control my Information Horizon: Information about me, and information that changes me.

Information about me

Unique Identifiers.

Firstly, there’s the direct data that uniquely identifies me: my name, address, phone number, IP address.

Behaviour data.

Then, there’s the data about me. My likes, my behaviours, who I communicate with and what we talk about, my whereabouts.

Derived data.

From that, statistical systems can work out my psychology, my intentions, my health, my politics, even into the future.

It’s important to recognise that each subsequent layer makes the last one no longer needed. So if someone has your Behaviour Data, they don’t need Unique Identifiers from you anymore. (They can just match your patterns.) If they have Derived Data, they can delete your Behaviour Data and still know everything about you.

This is why Facebook experimented with turning on people’s microphones to listen in on them, but then found they could get the same Derived Data models through other means.

(They later said microphone activation was only for specific features, but they had already automatically turned on those features without consent.)
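To make "they can just match your patterns" concrete, here is a toy sketch in Python. It is only an illustration under my own assumptions, not how any real platform works: the profiles, the interests and the `best_match` helper are all made up, and the similarity measure (Jaccard overlap between sets) is just one simple choice.

```python
def jaccard(a, b):
    """Share of items two sets have in common, from 0.0 to 1.0."""
    a, b = set(a), set(b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def best_match(anonymous_profile, known_profiles):
    """Return (name, score) for the known profile most similar to the anonymous one."""
    scores = {
        name: jaccard(anonymous_profile, interests)
        for name, interests in known_profiles.items()
    }
    name = max(scores, key=scores.get)
    return name, scores[name]


# Hypothetical behaviour data that is already linked to named people.
known = {
    "alice": {"cycling", "linux", "jazz", "barcelona"},
    "bob": {"football", "cars", "barbecue"},
}

# A profile with no name, address or phone number attached.
anonymous = {"linux", "jazz", "barcelona", "privacy"}

name, score = best_match(anonymous, known)
print(name, round(score, 2))
```

Even with no Unique Identifiers in the anonymous profile, the overlap in behaviour is enough to point at one person. Real systems have far more data points to work with than this.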

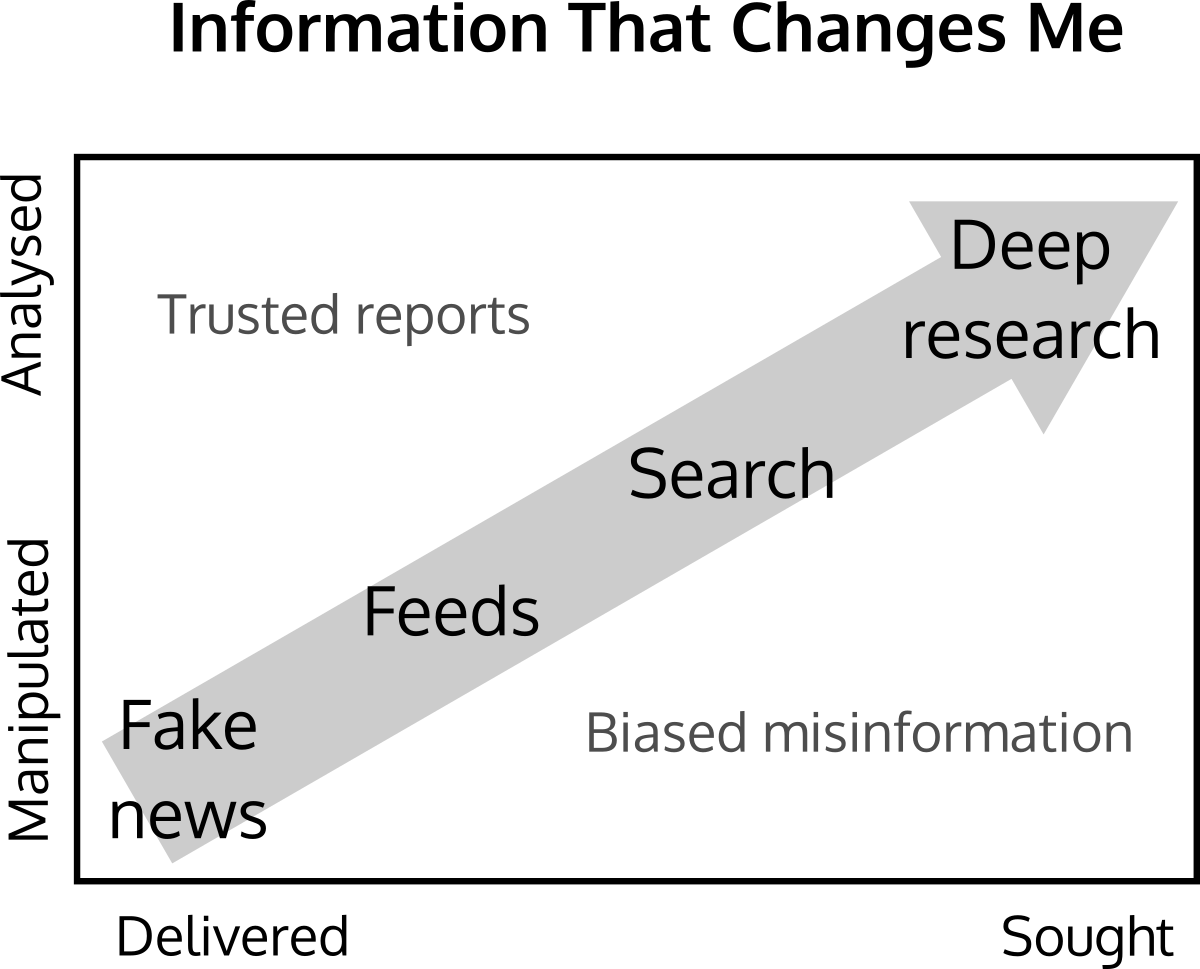

Information that changes me

On top of information about me, there’s information that changes me, in terms of manipulating my beliefs and behaviours.

Accessible Information.

There’s information I have access to. I can’t always get the answers I look for. There are filter bubbles even on search, and a lot of scientific knowledge is behind paywalls. And there’s lots of important information that’s not on the Internet.

Sought Information.

There are things I want to learn, news stories I want to research.

Delivered Information.

There’s information that’s placed where I pay attention. Stuff in various feeds, news on TV, opinions of friends.

Again, there’s a similar effect here. If an adversary can control what information you want, they don’t need to control what you have access to. If they can control what’s delivered to you, they can affect your opinion and control what you seek.

The general threat model

Information about me can be used to control what information I see. This is part of how our Information Horizons are reduced.

So now we get into how to fix the problem.

To improve my own visibility of information, I need to:

Control my own habits, especially being careful about where I spend my attention and what information I consume.

Reduce the Derived Data models about me, since those are used to systematically target me and manipulate what information I consume.

Trading control for convenience

Let me ask you something. You’ve become used to a lot of conveniences - which are you willing to give up to regain control?

A lot of our “conveniences” aren’t actually more convenient, they’re just manipulations of our habits and emotional responses.

Facebook feels more convenient when you want to quickly send a message to someone, or see random updates from random friends. But is it convenient at the end of a few hours wasted? Is it really convenient against alternatives, like checking a few news sites directly for world news, or sending a text message to say what’s up?

And there’s a huge upside in changing our habits - both politically and in feeling better. Just ask the people who do things like turn off notifications on their phone.

Change where you put your attention

One way is to shift your habits from Delivered Information to Sought Information. Delivered Information relies on filters that we don’t control, and that are actually quite crude and ineffective anyway. It might be nice that a system sort of understands what you like, but you know what you like way better.

Instead of Twitter feeds, pick a topic you care about and search regularly. You’ll be way more informed. Instead of Facebook feeds, reach out to people you care about and say hi. You’ll be way more in touch.

I learn a lot more about specific topics when I become proactive and roll up my sleeves. And it takes less time than reading all the linkbait shit that steals my attention. Twitter makes me feel on the pulse, but switching to Youtube or DuckDuckGo gives me way more depth and practical knowledge in way less time.

Uninstalling apps that have feeds from your phone is a nice boost without any real cost. I can still chat on my phone, and my feeds are on my computer, never far. But I have so much more time to process what I learn, and when I’m on my phone, I’m not mindlessly scrolling, I’m reading an ebook I downloaded, or studying with memory cards, or searching to answer a question I’ve been mulling.

Broaden your sources

We feel comfortable among like-minded people, so it’s easy to become complicit in shrinking our Information Horizons. A classic way to do this is with identity politics - put a label on a group of us and we will start to side with those people, even if we wouldn’t otherwise.

But while these labels and definitions shift easily, our allegiances to them don’t.

Comedians with strong politics have noticed this with their audiences over decades. Those who had ‘left-’ or ‘right-wing’ audiences soon found they had a much wider spectrum of fans. When I wrote a deconstruction of the Facebook privacy policy three years ago, it was read by over a million people, but that came in distinct waves: startup people, then doomsday preppers and conspiracy-theorists, then NRA supporters, then a bunch of Europeans who translated it into their native languages, and then when Trump was elected, American liberals. They all had this interest and fear in common, but good luck inviting them all to a meetup to talk about it.

Labels isolate us and make us assume we have less in common.

At first, being a peacenik lefty myself, I was disappointed and embarrassed that my most popular post was popular with crazies and gun nuts. But then I realised it was a gift to be able to understand a whole group of humans out there. I started to empathise with and respect NRA supporters even though I disagree with them. (I met some very rational, intelligent and educated people.) I could start to see their part of the world. So I started adding a wider range of sources to my feeds and my searches, counter-acting the biases of big news players by reading across them: BBC, RT, Reuters, FT, Al-Jazeera, SCMP. I also started to respect journalists and indies when they performed deeper research and fact-checking, even when I didn’t agree with their politics.

It’s not always comfortable, and I have to remind myself this is a small price to pay for a world connected by empathy.

Remove filters

It might be tough to give up all that convenient, delivered information, but remember that feeds don’t need to come with filters. RSS and blogs are still alive and well, and Twitter still gives you the option to turn off their feed prioritisation. (So you get every post, not just the ones that keep you on Twitter longer.) You can turn off Medium’s filters by subscribing directly through RSS rather than ‘following’. (It feels so much more genuine!)

It’s still filtered in a way, because you filter what you read based on what you choose to subscribe to, but you control the filter.
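If you’re curious what "a feed without filters" looks like under the hood, here is a minimal Python sketch. It assumes a standard RSS 2.0 feed and uses a tiny made-up sample (the blog name and example.org links are placeholders) instead of fetching a real one. The `read_feed` helper is my own illustrative name, not part of any library.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# A made-up RSS 2.0 feed, standing in for a blog you subscribe to.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example blog</title>
    <item>
      <title>Older post</title>
      <link>https://example.org/older</link>
      <pubDate>Mon, 01 Jan 2018 09:00:00 +0000</pubDate>
    </item>
    <item>
      <title>Newer post</title>
      <link>https://example.org/newer</link>
      <pubDate>Tue, 02 Jan 2018 09:00:00 +0000</pubDate>
    </item>
  </channel>
</rss>"""


def read_feed(xml_text):
    """Return every item in the feed, newest first, with no ranking applied."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        items.append({
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            # RSS dates use the same format as email headers.
            "published": parsedate_to_datetime(item.findtext("pubDate")),
        })
    # The only ordering is by date: nothing is hidden or promoted.
    return sorted(items, key=lambda i: i["published"], reverse=True)


posts = read_feed(SAMPLE_FEED)
for post in posts:
    print(post["published"].date(), post["title"], post["link"])
```

Every post the author published shows up, in date order. In practice a feed reader does this for you, so there’s no need to write any code to get the benefit.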

Expand your access to information

About a week before the Catalonian referendum, I noticed the disinformation machine activate. So much bullshit on both sides. I got swept up in all the fast-paced Twitter ‘coverage’ - a lot of which was blatantly false.

So I started reaching out to Spanish people I knew, both from my short time living in Barcelona and my various startup activities in Madrid and Brussels, asking them for their experience and for guidance.

By that time, it was clearly such an emotional thing. That was clear in how they communicated (or reacted angrily that I would ask such stupid questions!). But in a few cases, people were able to respond to my questions with facts. That was really helpful for me.

If we’re lucky enough to have made personal connections all over the world, one on one conversations are so much better than one-to-many broadcasts. They’re more real and honest.

Starve information collection about you

I once thought that I could stay on Facebook and trick it so that it would get an inaccurate, or at least distorted, picture of me.

Facebook uses a lot more than just your likes and posts to build a picture of you. They watch what you read (through like buttons on the web) and they use statistical models to derive information about you based on similarities to your friends.

On top of this, Facebook has the right to get your transaction information from your bank and your credit card. They’ve admitted to turning on users’ microphones to listen in to your life.

All of this information is difficult to manipulate and fake.

After spending a few months understanding machine learning, I realised that ‘faking’ doesn’t really work technically either. The algorithms that find useful conclusions about you - like your political leanings or your health status - are based on finding needles in the haystack of all this data about you. You can temporarily introduce other needles, but the real ones are still always there and always clearer.

The only way to stop this is to not provide the haystack. Hide it. The way to beat these systems is to starve them.

If you don’t want to be listened in on, then you have to uninstall FB Messenger or physically cover your mic. (Black electrical tape is good for this and isn’t noticeable.)

If you don’t want Facebook to get your banking information, you have to delete your account, thus revoking the right you grant them in the Terms Of Service.

Anonymise

There are a lot of easy tools out there that prevent systems from identifying you:

- VPNs and Tor hide your IP address from the sites you visit. These are easier than you think, and there are even free VPNs.

- NoScript and Privacy Badger help block browser fingerprinting.

- Firefox Tracking Protection blocks ad networks and social networks from collecting information about you when you’re on other sites.

Those are easy, and come with only tiny inconveniences.

Mobile phones, on the other hand, are a free-for-all on your personal information. Aside from the insecurity of the devices themselves, phones are designed to identify the user. And app permissions are a joke too. Try to use the mobile web version instead of the native app if you can.

This starts to get into device security, but if you want to look into more secure phones, take a look at Replicant phones from Technoethical or Purism. I’m looking forward to the Neo900, which isolates the phone’s baseband from the main computer.

These days, I’m experimenting with the idea of no phone. I have a feature phone, and I often just leave it at home. Once I got over the mild anxiety of not being connected, I started to experience the world in more vivid detail. It’s weird, but I started to hear more things when out for a walk.

Support EU Directives and organisations like Mozilla, EFF and FSF

A lot of these problems need to be resolved at a broader, political level. Next year, the EU is introducing a policy that will allow citizens to insist that their personal data is deleted (but it’s not clear if that will include derived data). This is a conversation you can join.

The EFF, Mozilla and the Free Software Foundation have also worked to protect us from these problems for a long time. They increasingly depend on financial support from donors. They’re worth supporting, not just because of their policy work, but because they build practical tools that you can use yourself. They can make your world a better place right away.

Build your house, build your home

Building your own house is a romantic notion, and it has its rewards, but also its costs. It’s hard work.

When I switched to Linux, it was a pain. But I did it because I wanted to learn and I wanted to use freedom-respecting systems. There were a lot of inconveniences and setbacks, but now, I have a powerful, customised system that I would never trade.

I got off Facebook years ago, and it came with its costs. But there are plenty of people who quit before me, and said how good life was. It took a while, but I got there. Now, when I see someone on Facebook, it looks so old-fashioned, like Yahoo, and when I look at the shit that they’re looking at, it seems like deluxe spam, or people being fake. My friend lost his dog a few months back, and felt he should load up his unused Facebook account to share the news, but when it came time to type, he realised this form of communication was actually too impersonal.

We have a lot better options, if we’re willing to do even a bit of work. You can get back a lot with just a little: a deeper sense of connection to people, a better understanding of what affects us, and regained self-determination.
